Publications

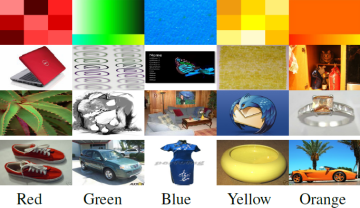

B. Schauerte, R. Stiefelhagen, "How the Distribution of Salient Objects in Images Influences Salient Object Detection". In Proceedings of the 20th International Conference on Image Processing (ICIP), Melbourne, Australia, September 15-18, 2013.

Abstract: We investigate the spatial distribution of salient objects in images. First, we empirically show that the centroid locations of salient objects correlate strongly with a centered, half-Gaussian model. This is an important insight, because it provides a justification for the integration of such a center bias in salient object detection algorithms. Second, we assess the influence of the center bias on salient object detection. Therefore, we integrate an explicit center bias into Cheng’s state-of-the-art salient object detection algorithm. This way, first, we quantify the influence of the Gaussian center bias on salient object detection, second, improve the performance with respect to several established evaluation measures, and, third, derive a state-of-the-art unbiased salient object detection algorithm.

Keywords: Salient Object Detection, Spatial Distribution, Photographer Bias, Center Bias

Download: [ pdf - draft] [ bibtex] [ code #1 - Locally Debiased Region Contrast] [ code #2 - Distribution Analysis] [ code #3 - Basic Evaluation Script]
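As a rough illustration of the center bias described in the abstract above, the following sketch builds a centered Gaussian weight map and multiplies it into a saliency map. The function names, the `sigma_frac` parameter, and the multiplicative combination are assumptions made for this example; they are not taken from the paper or from the linked code.

```python
import math


def gaussian_center_bias(height, width, sigma_frac=0.3):
    """Return a height x width map of Gaussian weights that peak at the image center.

    sigma_frac is a hypothetical parameter: the standard deviation expressed
    as a fraction of each image dimension.
    """
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    sy, sx = sigma_frac * height, sigma_frac * width
    return [
        [math.exp(-0.5 * (((y - cy) / sy) ** 2 + ((x - cx) / sx) ** 2))
         for x in range(width)]
        for y in range(height)
    ]


def apply_center_bias(saliency, sigma_frac=0.3):
    """Multiply a saliency map element-wise with the center-bias map.

    The multiplicative combination is an assumption for illustration only.
    """
    height, width = len(saliency), len(saliency[0])
    bias = gaussian_center_bias(height, width, sigma_frac)
    return [[s * b for s, b in zip(s_row, b_row)]
            for s_row, b_row in zip(saliency, bias)]
```

Applied to a uniform saliency map, this leaves the value at the image center unchanged and attenuates values towards the borders.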

M. Martinez, B. Schauerte, R. Stiefelhagen, "BAM! Depth-based Body Analysis in Critical Care". In Proceedings of the 15th International Conference on Computer Analysis of Images and Patterns (CAIP), York, UK, August 27-29, 2013.

Abstract: We investigate computer vision methods to monitor Intensive Care Units (ICU) and assist in sedation delivery and accident prevention. We propose the use of a Bed Aligned Map (BAM) to analyze the patient’s body. We use a depth camera to localize the bed, estimate its surface and divide it into 10 cm x 10 cm cells. Here, the BAM represents the average cell height over the mattress. This depth-based BAM is independent of illumination and bed positioning, improving the consistency between patients. This representation allows us to develop metrics to estimate bed occupancy, body localization, body agitation and sleeping position. Experiments with 23 subjects show an accuracy in 4-level agitation tests of 88% and 73% in supine and fetal positions respectively, while sleeping position was recognized with a 100% accuracy in a 4-class test.

Keywords: Depth Camera, Critical Care, Monitoring, Agitation, Sleeping Position

Download: [ pdf] [ bibtex]
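The Bed Aligned Map from the abstract above can be sketched as a grid of mean heights over 10 cm x 10 cm cells. This is a minimal sketch that assumes the bed has already been localized and that each depth measurement is available as an `(x, y, height)` tuple in bed-aligned coordinates, in meters. The function name and input format are hypothetical and not taken from the paper.

```python
def bed_aligned_map(points, bed_length, bed_width, cell=0.10):
    """Average the height above the mattress in each cell of a bed-aligned grid.

    points: iterable of (x, y, height) tuples in meters, with x along the bed
    length and y along the bed width (an assumed input format).
    Returns a rows x cols list of mean heights; cells without points are None.
    """
    rows = max(1, round(bed_length / cell))
    cols = max(1, round(bed_width / cell))
    sums = [[0.0] * cols for _ in range(rows)]
    counts = [[0] * cols for _ in range(rows)]
    for x, y, h in points:
        # Ignore measurements that fall outside the bed surface.
        if not (0 <= x < bed_length and 0 <= y < bed_width):
            continue
        r = min(int(x / cell), rows - 1)
        c = min(int(y / cell), cols - 1)
        sums[r][c] += h
        counts[r][c] += 1
    return [
        [sums[r][c] / counts[r][c] if counts[r][c] else None for c in range(cols)]
        for r in range(rows)
    ]
```

For example, `bed_aligned_map(points, 2.0, 0.9)` would produce a 20 x 9 grid for a 2.0 m x 0.9 m mattress.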

D. Koester, B. Schauerte, R. Stiefelhagen, "Accessible Section Detection For Visual Guidance". In IEEE Workshop on Multimodal and Alternative Perception for Visually Impaired People (MAP4VIP), San Jose, CA, USA, July 15-19, 2013.

Abstract: We address the problem of determining the accessible section in front of a walking person. In our definition, the accessible section is the spatial region that is not blocked by obstacles. For this purpose, we use gradients to calculate surface normals on the depth map and subsequently determine the accessible section using these surface normals. We demonstrate the effectiveness of the proposed approach on a novel, challenging dataset. The dataset consists of urban outdoor and indoor scenes that were recorded with a handheld stereo camera.

Keywords: Visually Impaired, Computer Vision, Obstacle Detection, Guidance, Navigation

Download: [ bibtex] [ code #1 - BVS] [ code #2 - BVS modules]
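The abstract above mentions computing surface normals from gradients of the depth map. The following sketch covers only that step, under a simplifying assumption: the depth map is treated as a height field on a regular pixel grid, and camera intrinsics are ignored. The function name is hypothetical, and how the accessible section is derived from the normals is not shown here.

```python
import math


def surface_normals(depth):
    """Estimate a unit normal per pixel from finite-difference depth gradients.

    depth: 2-D list of depth values. The normal is taken proportional to
    (-dz/dx, -dz/dy, 1), which assumes a height field on a unit pixel grid.
    """
    rows, cols = len(depth), len(depth[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Central differences inside the image, one-sided at the borders.
            x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)
            dzdx = (depth[y][x1] - depth[y][x0]) / max(x1 - x0, 1)
            dzdy = (depth[y1][x] - depth[y0][x]) / max(y1 - y0, 1)
            norm = math.sqrt(dzdx ** 2 + dzdy ** 2 + 1.0)
            row.append((-dzdx / norm, -dzdy / norm, 1.0 / norm))
        normals.append(row)
    return normals
```

A flat depth map yields normals pointing along the viewing axis, while a ramp tilts them against the direction of increasing depth.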

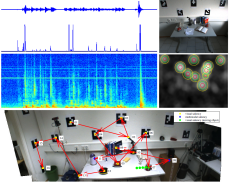

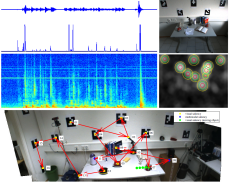

B. Schauerte, R. Stiefelhagen, "Wow! Bayesian Surprise for Salient Acoustic Event Detection". In Proceedings of the 38th International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Vancouver, Canada, May 26-31, 2013.

Abstract: We extend our previous work and present how Bayesian surprise can be applied to detect salient acoustic events. Therefore, we use the Gamma distribution to model each frequency's spectrogram distribution. Then, we use the Kullback-Leibler divergence of the posterior and prior distribution to calculate how "unexpected" and thus surprising newly observed audio samples are. This way, we are able to efficiently detect arbitrary, unexpected and thus surprising acoustic events. Complementing our qualitative system evaluations for (humanoid) robots, we demonstrate the effectiveness and practical applicability of the approach on the CLEAR 2007 acoustic event detection data.

Keywords: Acoustic Event Detection, Acoustic Saliency, Salient Event Detection

Download: [ pdf - draft] [ bibtex] [ code #1 - Gaussian and Gamma surprise / auditory saliency] [ poster]
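To make the idea in the abstract above concrete, the following sketch computes a surprise value as the Kullback-Leibler divergence between a Gamma posterior and its Gamma prior for one frequency band. The closed-form divergence for shape/rate parameters is standard. The update rule (a decayed conjugate update), the direction of the divergence, and all names and default values are assumptions made for this example and may differ from the paper and the linked code.

```python
import math


def digamma(x):
    """Approximate the digamma function for x > 0 (recurrence plus asymptotic series)."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    return result + math.log(x) - 0.5 * inv - inv2 * (
        1.0 / 12.0 - inv2 * (1.0 / 120.0 - inv2 / 252.0))


def gamma_kl(a_post, b_post, a_prior, b_prior):
    """KL(Gamma(a_post, b_post) || Gamma(a_prior, b_prior)) with shape a and rate b."""
    return ((a_post - a_prior) * digamma(a_post)
            - math.lgamma(a_post) + math.lgamma(a_prior)
            + a_prior * (math.log(b_post) - math.log(b_prior))
            + a_post * (b_prior - b_post) / b_post)


def surprise_sequence(samples, shape=1.0, rate=1.0, decay=0.9):
    """Return one surprise value per non-negative sample of a single frequency band.

    Assumed update (not from the paper): shape <- decay * shape + sample,
    rate <- decay * rate + 1, with the old parameters acting as the prior.
    """
    surprises = []
    for x in samples:
        new_shape = decay * shape + x
        new_rate = decay * rate + 1.0
        surprises.append(gamma_kl(new_shape, new_rate, shape, rate))
        shape, rate = new_shape, new_rate
    return surprises
```

With a steady input followed by a sudden loud sample, for example `surprise_sequence([1.0] * 8 + [20.0])`, the last value is much larger than the ones before it.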

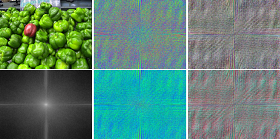

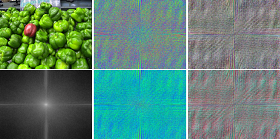

B. Schauerte, R. Stiefelhagen, "Quaternion-based Spectral Saliency Detection for Eye Fixation Prediction". In Proceedings of the 12th European Conference on Computer Vision (ECCV), Firenze, Italy, October 7-13, 2012.

Abstract: In recent years, several authors have reported that spectral saliency detection methods provide state-of-the-art performance in predicting human gaze in images. We systematically integrate and evaluate quaternion DCT- and FFT-based spectral saliency detection, weighted quaternion color space components, and the use of multiple resolutions. Furthermore, we propose the use of the eigenaxes and eigenangles for spectral saliency models that are based on the quaternion Fourier transform. We demonstrate the outstanding performance on the Bruce-Tsotsos (Toronto), Judd (MIT), and Kootstra-Schomacker eye-tracking data sets.

Keywords: Spectral Saliency, Quaternion; Multi-Scale, Color Space, Quaternion Component Weight, Quaternion Axis; Attention; Human Gaze, Eye-Tracking; Bruce-Tsotsos (Toronto), Judd (MIT), and Kootstra-Schomacker data set

Download: [ pdf] [ bibtex] [ code #1 - visual saliency toolbox] [ code #2 - Matlab AUC measure implementation] [ poster (1)] [ poster (2)]

B. Kühn, B. Schauerte, K. Kroschel, R. Stiefelhagen, "Multimodal Saliency-based Attention: A Lazy Robot’s Approach".

In Proceedings of the 25th International Conference on Intelligent Robots and Systems (IROS), Vilamoura, Algarve, Portugal, October 7-12, 2012.

Abstract: We extend our work on an integrated object-based system for saliency-driven overt attention and knowledge-driven object analysis. We present how we can reduce the amount of necessary head movement during scene analysis while still focusing all salient proto-objects in an order that strongly favors proto-objects with a higher saliency. Furthermore, we integrated motion saliency and as a consequence adaptive predictive gaze control to allow for efficient gazing behavior on the ARMAR-III robot head. To evaluate our approach, we first collected a new data set that incorporates two robotic platforms, three scenarios, and different scene complexities. Second, we introduce measures for the effectiveness of active overt attention mechanisms in terms of saliency cumulation and required head motion. This way, we are able to objectively demonstrate the effectiveness of the proposed multicriterial focus of attention selection.

Keywords: Exploration Path, Optimization, Active Perception, Saliency-based Overt Attention, Scene Exploration

Download: [ pdf] [ bibtex]

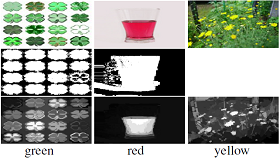

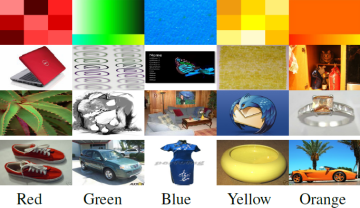

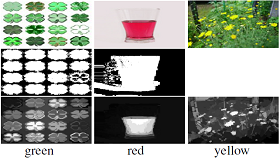

B. Schauerte, R. Stiefelhagen, "Learning Robust Color Name Models from Web Images". In Proceedings of the 21st International Conference on Pattern Recognition (ICPR), Tsukuba, Japan, November 11-15, 2012.

Abstract: We use images that have been collected using an Internet search engine to train color name models for color naming and recognition tasks. Considering color histogram bands as being words of an image and the color names as classes, we use the supervised latent Dirichlet allocation to train our model. To pre-process the training data, we use state-of-the-art salient object detection and a Kullback–Leibler divergence based outlier detection. In summary, we achieve state-of-the-art performance on the eBay data set and improve the similarity between labels assigned by our model and human observers by approximately 14 %.

Keywords: Color Terms; Color Naming; Web-based Learning; Supervised Latent Dirichlet Allocation (SLDA); Human-Robot Interaction

Download: [ pdf] [ bibtex] [ data set #1 - Google-512] [ code #1 - Locally Debiased Region Contrast] [ code #2 - Histogram Distances] [ poster]

B. Kühn, B. Schauerte, R. Stiefelhagen, K. Kroschel, "A Modular Audio-Visual Scene Analysis and Attention System for Humanoid Robots". In Proceedings of the 43rd International Symposium on Robotics (ISR), Taipei, Taiwan, August 29-31, 2012.

Abstract: We present an audio-visual scene analysis system, which is implemented and evaluated on the ARMAR-III robot head. The modular design allows a fast integration of new algorithms and adaptation to new hardware. Further benefits are automatic module dependency checks and determination of the execution order. The integrated world model manages and serves the acquired data for all modules in a consistent way. The system has a state-of-the-art performance in localization, tracking and classification of persons as well as exploration of whole scenes and unknown items. We use multimodal proto-objects to model and analyze salient stimuli in the environment of the robot to realize the robot's attention.

Keywords: Scene Analysis, Hierarchical Entity-based Exploration, Audio-Visual Saliency-based Attention, World Model; Humanoid Robot

Download: [ pdf] [ bibtex]

B. Schauerte, M. Martinez, A. Constantinescu, R. Stiefelhagen, "An Assistive Vision System for the Blind that Helps Find Lost Things". In Proceedings of the 13th International Conference on Computers Helping People with Special Needs (ICCHP), Springer, Linz, Austria, July 11-13, 2012.

Abstract: We present a computer vision system that helps blind people find lost objects. To this end, we combine color- and SIFT-based object detection with sonification to guide the hand of the user towards potential target object locations. This way, we are able to guide the user’s attention and effectively reduce the space in the environment that needs to be explored. We verified the suitability of the proposed system in a user study.

Keywords: Computer Vision for the Blind, Assistive Technologies; Object Recognition, Color Naming; Sonification; Interactive Object Search, Scene Exploration

Download: [ pdf] [ bibtex]

B. Schauerte, R. Stiefelhagen, "Predicting Human Gaze using Quaternion DCT Image Signature Saliency and Face Detection". In Proceedings of the 12th IEEE Workshop on the Applications of Computer Vision (WACV) / IEEE Winter Vision Meetings, Breckenridge, CO, USA, January 9-11, 2012.

(Best Student Paper Award)

Abstract: We combine and extend the previous work on DCT-based image signatures and face detection to determine the visual saliency. To this end, we transfer the scalar definition of image signatures to quaternion images and thus introduce a novel saliency method using quaternion type-II DCT image signatures. Furthermore, we use MCT-based face detection to model the important influence of faces on the visual saliency using rotated elliptical Gaussian weight functions and evaluate several integration schemes. In order to demonstrate the performance of the proposed methods, we evaluate our approach on the Bruce-Tsotsos (Toronto) and Cerf (FIFA) benchmark eye-tracking data sets. Additionally, we present evaluation results on the Bruce-Tsotsos data set of the most important spectral saliency approaches. We achieve state-of-the-art results in terms of the well-established area under curve (AUC) measure on the Bruce-Tsotsos data set and come close to the ideal AUC on the Cerf data set - with less than one millisecond to calculate the bottom-up QDCT saliency map.

Keywords: Spectral Saliency, Quaternion, DCT Image Signatures; MCT Face Detection; Attention; Human Gaze, Eye-Tracking; Bruce-Tsotsos (Toronto) and Cerf (FIFA) data set

Download: [ pdf] [ bibtex] [ code #1 - visual saliency toolbox] [ code #2 - Matlab AUC measure implementation] [ data set #1 - Cerf/FIFA face detections]

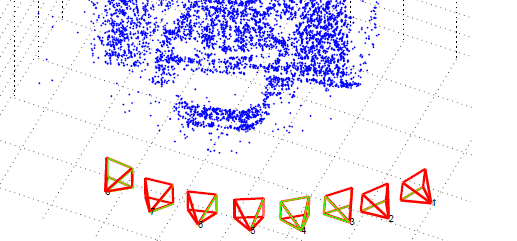

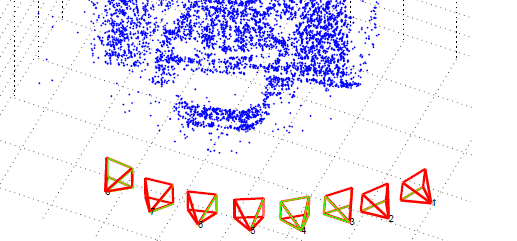

H. Jaspers, B. Schauerte, G. A. Fink, "SIFT-based Camera Localization using Reference Objects for Application in Multi-Camera Environments and Robotics". In Proceedings of the 1st International Conference on Pattern Recognition Applications and Methods (ICPRAM), Vilamoura, Algarve, Portugal, February 6-8, 2012.

Abstract: We present a unified approach to improve the localization and the perception of a robot in a new environment by using already installed cameras. Using our approach we are able to localize arbitrary cameras in multi-camera environments while automatically extending the camera network in an online, unattended, real-time way. This way, all cameras can be used to improve the perception of the scene, and additional cameras can be added in real-time, e.g., to remove blind spots. [...] we use it to iteratively calibrate the camera network as well as to localize arbitrary cameras, e.g. of mobile phones or robots, inside a multi-camera environment. [...]

Keywords: Camera Pose Estimation, Camera Calibration; Scale Ambiguity; SIFT; Multi-Camera Environment, Smart Room, Robot Localization

Download: [ pdf] [ bibtex] [ code #1] [ data set #1]

B. Schauerte, B. Kühn, K. Kroschel, R. Stiefelhagen, "Multimodal Saliency-based Attention for Object-based Scene Analysis".

In Proceedings of the 24th International Conference on Intelligent Robots and Systems (IROS), IEEE/RSJ, San Francisco, CA, USA, September 25-30, 2011.

Abstract: Multimodal attention is a key requirement for humanoid robots in order to navigate in complex environments and act as social, cognitive human partners. To this end, robots have to incorporate attention mechanisms that focus the processing on the potentially most relevant stimuli while controlling the sensor orientation to improve the perception of these stimuli. In this paper, we present our implementation of audio-visual saliency-based attention that we integrated in a system for knowledge-driven audio-visual scene analysis and object-based world modeling. For this purpose, we introduce a novel isophote-based method for proto-object segmentation of saliency maps, a surprise-based auditory saliency definition, and a parametric 3-D model for multimodal saliency fusion. The applicability of the proposed system is demonstrated in a series of experiments.

Keywords: Multimodal, Audio-Visual Attention; Auditory Surprise; Isophote-based Visual Proto-Objects; Parametric 3-D Saliency Model and Fusion; Object-based Inhibition of Return; Object-based Scene Exploration and Hierarchical Analysis

Download: [ pdf] [ bibtex] [ code #1 - visual saliency toolbox] [ code #2 - windowed Gaussian surprise / auditory saliency]

B. Schauerte, G. A. Fink, "Web-based Learning of Naturalized Color Models for Human-Machine Interaction".

In Proceedings of the 12th International Conference on Digital Image Computing: Techniques and Applications (DICTA), IEEE, Sydney, Australia, December 1-3, 2010.

Abstract: In recent years, natural verbal and non-verbal human-robot interaction has attracted increasing interest. Therefore, models for robustly detecting and describing visual attributes of objects, such as colors, are of great importance. However, in order to learn robust models of visual attributes, large data sets are required. Based on the idea to overcome the shortage of annotated training data by acquiring images from the Internet, we propose a method for robustly learning natural color models. Its novel aspects with respect to prior art are: firstly, a randomized HSL transformation that reflects the slight variations and noise of colors observed in real-world imaging sensors; secondly, a probabilistic ranking and selection of the training samples, which removes a considerable amount of outliers from the training data. [...]

Keywords: Color Terms; Color Naming; Web-based Learning; Natural vs. Web-based Image Statistics; Domain Adaptation; Probabilistic HSL Model; Probabilistic Latent Semantic Analysis (pLSA); Human-Robot Interaction

Download: [ pdf] [ bibtex] [ data set #1 - Google-512] [ poster] [ report]

B. Schauerte, G. A. Fink, "Focusing Computational Visual Attention in Multi-Modal Human-Robot Interaction".

In Proceedings of the 12th International Conference on Multimodal Interfaces (ICMI)[1], ACM, Beijing, China, November 8-12, 2010.

(Doctoral Spotlight; Google Travel Grant)

Abstract: Identifying verbally and non-verbally referred-to objects is an important aspect of human-robot interaction. Most importantly, it is essential to achieve a joint focus of attention and, thus, a natural interaction behavior. In this contribution, we introduce a saliency-based model that reflects how multi-modal referring acts influence the visual search, i.e. the task to find a specific object in a scene. Therefore, we combine positional information obtained from pointing gestures with contextual knowledge about the visual appearance of the referred-to object obtained from language. The available information is then integrated into a biologically-motivated saliency model that forms the basis for visual search. We prove the feasibility of the proposed approach by presenting the results of an experimental evaluation.

Keywords: Modulatable Neuron-based, Phase-based, Spectral Whitening Saliency; Attention; Visual Search; Objects; Color; Shared Attention, Joint Attention; Multi-Modal Interaction, Gestures, Pointing, Language; Deictic Interaction; Spoken Human-Robot Interaction

Download: [ pdf] [ bibtex]

B. Schauerte, J. Richarz, G. A. Fink, "Saliency-based Identification and Recognition of Pointed-at Objects".

In Proceedings of the 23rd International Conference on Intelligent Robots and Systems (IROS), IEEE/RSJ, Taipei, Taiwan, October 18-22, 2010.

Abstract: When persons interact, non-verbal cues are used to direct the attention of persons towards objects of interest. Achieving joint attention this way is an important aspect of natural communication. Most importantly, it allows coupling verbal descriptions with the visual appearance of objects, if the referred-to object is non-verbally indicated. In this contribution, we present a system that utilizes bottom-up saliency and pointing gestures to efficiently identify pointed-at objects. Furthermore, the system focuses the visual attention by steering a pan-tilt-zoom camera towards the object of interest and thus provides a suitable model-view for SIFT-based recognition and learning.

Keywords: Spectral Residual Saliency, Spectral Whitening Saliency; Joint/Shared Attention, Pointing Gestures; Object Detection and Learning; Maximally Stable Extremal Regions (MSER); Scale-Invariant Feature Transform (SIFT); Active Pan-Tilt-Zoom Camera; Human-Robot Interaction

Download: [ pdf] [ bibtex] [ errata]

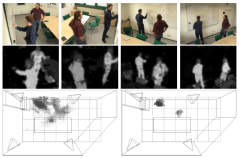

B. Schauerte, J. Richarz, T. Plötz, C. Thurau, G. A. Fink, "Multi-Modal and Multi-Camera Attention in Smart Environments".

In Proceedings of the 11th International Conference on Multimodal Interfaces (ICMI)[1], pp. 261-268, ACM, Cambridge, MA, USA, November 2-4, 2009.

(Outstanding Student Paper Award Finalist; MERL/NSF Travel Grant)

Abstract: This paper considers the problem of multi-modal saliency and attention. Saliency is a cue that is often used for directing attention of a computer vision system, e.g., in smart environments or for robots. Unlike the majority of recent publications on visual/audio saliency, we aim at a well grounded integration of several modalities. The proposed framework is based on fuzzy aggregations and offers a flexible, plausible, and efficient way for combining multi-modal saliency information. Besides incorporating different modalities, we extend classical 2D saliency maps to multi-camera and multi-modal 3D saliency spaces. For experimental validation we realized the proposed system within a smart environment. The evaluation took place for a demanding setup under real-life conditions, including focus of attention selection for multiple subjects and concurrently active modalities.

Keywords: Multi-Camera; 3-D Spatial Saliency; Multi-Modal Saliency; Attention; Active Multi-Camera Control; Volumetric Intersection, Minimal Reconstruction Error; View Selection, Viewpoint Selection; Multi-Modal Sensor Fusion; Fuzzy; Smart Room; Human-Machine Interaction

Download: [ pdf] [ bibtex] [ code #1 - reconstruction error approximation] [ video - view selection]

B. Schauerte, T. Plötz, G. A. Fink, "A Multi-modal Attention System for Smart Environments".

In Proceedings of the 7th International Conference on Computer Vision Systems (ICVS), Lecture Notes in Computer Science, LNCS 5815, pp. 73-83, Springer, Liege, Belgium, October 13-15, 2009.

Abstract: Focusing their attention to the most relevant information is a fundamental biological concept, which allows humans to (re-)act rapidly and safely in complex and unfamiliar environments. This principle has successfully been adopted for technical systems where sensory stimuli need to be processed in an efficient and robust way. In this paper a multi-modal attention system for smart environments is described that explicitly respects efficiency and robustness aspects already by its architecture. The system facilitates unconstrained human-machine interaction by integrating multiple sensory information of different modalities.

Keywords: Multi-Modal; Multi-Camera; 3-D Spatial Saliency; Attention; Active Multi-Camera Control; Volumetric Intersection; View Selection, Viewpoint Selection; Real-Time Performance; Distributed, Scalable System; Design; Smart Environment, Smart Room; Human-Machine Interaction

Download: [ pdf] [ bibtex] [ video - view selection]

B. Schauerte, "Multi-modale Aufmerksamkeitssteuerung in einer intelligenten Umgebung" (Multi-modal attention control in an intelligent environment).

Diplom (M.Sc.) Thesis, TU Dortmund University, 2008.

Abstract: Intelligent environments are supposed to simplify the everyday life of their users. To reach this target, a multitude of sensors is necessary to create a model of the current scene. The complete processing of the resulting sensor data stream can exceed the available processing capacities and inhibit the real-time processing of the complete sensor data. A possible solution to this problem is a fast pre-selection of potentially relevant sensor data and the restriction of complex calculations on the pre-selected sensor data. That leaves the question of what is potentially relevant. [...]

Download: [ bibtex]

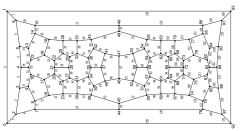

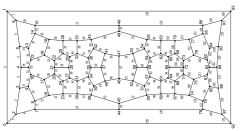

B. Schauerte, C. T. Zamfirescu, "Regular graphs in which every pair of points is missed by some longest cycle".

In Annals of University of Craiova, Volume 33, pp. 154-173, 2006.

Abstract: In Petersen's well-known cubic graph every vertex is missed by some longest cycle. Thomassen produced a planar graph with this property. Grünbaum found a cubic graph, in which any two vertices are missed by some longest cycle. In this paper we present a cubic planar graph fulfilling this condition.

Download: [ pdf] [ bibtex]

B. Schauerte, "Root Treatment - The dangers of rootkits".

In Linux Magazine (UK), Volume 19, pp. 20-23, April, 2002.

B. Schauerte, "Feind im Dunkeln - Wie gefährlich sind die Cracker-Werkzeuge".

In Linux Magazin (DE), Volume 3, pp. 44-47, February, 2002.

B. Schauerte, "Verborgene Gefahren - Trojanische Pferde in den Kernel laden".

In Linux Magazin (DE), Volume 11, pp. 60-63, October, 2001.

Copyright Notice: This material is presented to ensure timely dissemination of scholarly and technical work. Copyright and all rights therein are retained by authors or by other copyright holders. All persons copying this information are expected to adhere to the terms and constraints invoked by each author's copyright. In most cases, these works may not be reposted without the explicit permission of the copyright holder.

Footnotes:

[1] ICMI was renamed to "International Conference on Multimodal Interaction" in 2011.
