Computer Vision for Human-Computer Interaction Lab

The CV:HCI lab is part of the Institute for Anthropomatics and Robotics (IAR) of the Department of Computer Science at the Karlsruhe Institute of Technology.

The lab is directed by Prof. Dr.-Ing. Rainer Stiefelhagen, who also directs the KIT Center for Digital Accessibility and Assistive Technology (ACCESS@KIT). Together with ACCESS@KIT, we develop new assistive technologies for visually impaired people. We also collaborate closely with Fraunhofer IOSB in Karlsruhe.

We are proud to announce that, once again, one of our former PhD students, Dr.-Ing. Jiaming Zhang, has won the 17th KIT Doctoral Award 2023/2024 for his PhD thesis 'Scene Understanding for Intelligent Transportation and Mobility Assistance Systems'.

https://www.khys.kit.edu/english/kit-doctoral-award_winners.php

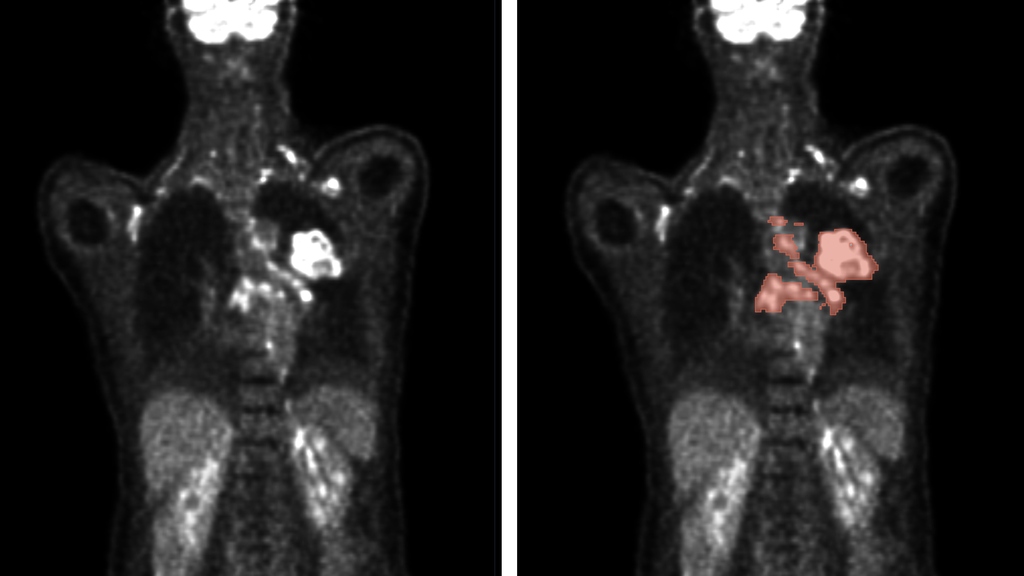

Our PhD student Zdravko Marinov teamed up with Prof. Jens Kleesiek's research group at the Institute of Artificial Intelligence for Medicine (IKIM) and secured a place among the top 5 of 359 registered teams in the autoPET challenge. Learn more about this achievement in our paper published in Nature Machine Intelligence and in KIT's press release!

Starting this summer semester, we offer a new seminar called 'Multimodal Large Language Models'. The seminar is intended to give students an up-to-date understanding of the technologies behind recent developments in large multimodal models such as GPT-4.

Please note that we no longer offer our lecture 'Biometric Systems for Person Identification'. However, there is a last chance to take the oral exam until the end of the summer semester 2024.

We are proud to announce that our former PhD student Constantin Seibold has won the 16th KIT Doctoral Award 2022/2023.

In his thesis ‘Towards the automatic generation of medical reports in low supervision scenarios’, Constantin worked on the automatic evaluation of radiological images and on methods for the automatic generation of medical reports. In particular, he investigated whether and how it is possible to learn from limited supervision, such as the medical reports that are available for almost all radiological images, or to generate supervision signals from alternative medical imaging domains such as computed tomography (CT). In other words, he studied how readily available data can be used to train image processing methods (deep learning models) to detect pathological structures such as pneumonia or pneumothorax. This would eliminate the need for experts and doctors to manually annotate many thousands of training images, which is very time-consuming and does not scale. In his work, he was able to show that this is possible.

https://www.khys.kit.edu/promotionspreis_des_kit_preistraeger_innen.php

In August 2023, our lab moved into InformatiKOM II at Adenauerring 10 (bldg. 50.28). You will find us on the first floor.

We have once again received the award for the best practical course, this time for the summer semester 2021. Thanks to all colleagues who contributed!

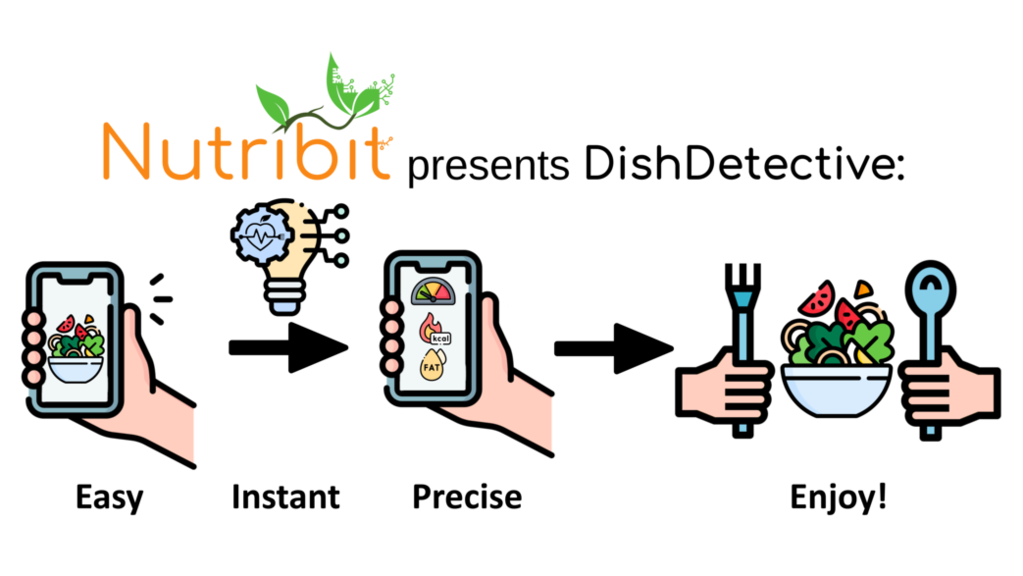

Nutribit, an AI food intelligence startup, will soon launch its first product: the DishDetective app. Nutribit's story began in a practical course at CV:HCI, and we are now very happy to support the team as mentors in its first year as a startup.

With DishDetective, all you have to do is take one picture of your meal, and the app instantly analyzes its nutrients. Instead of spending time on manual tracking, you can start eating right away.

In March 2022, our partner institute, the former Study Center for the Visually Impaired (SZS), was renamed ACCESS@KIT, the Center for Digital Accessibility and Assistive Technology.

The new name reflects the topics the center has been working on for many years in service, research and teaching: enabling inclusive study and developing support systems for people with visual impairments.

Our PostDoc Dr.-Ing. Alina Roitberg won another award for her doctoral thesis 'Uncertainty-aware Models for Deep Learning-based Human Activity Recognition and Applications in Intelligent Vehicles'.

With this award, KIT honors outstanding doctoral researchers, thereby highlighting the great importance of young scientists at KIT.

Congratulations!

Dr.-Ing. Alina Roitberg won the second prize of the IEEE Intelligent Transportation Systems Society’s Best PhD Dissertation Award (2021) for her PhD thesis 'Uncertainty-aware Models for Deep Learning-based Human Activity Recognition and Applications in Intelligent Vehicles'.

Starting this winter semester, we will offer a new lecture: 'Deep Learning for Computer Vision - Advanced Topics'.

The lecture 'Deep Learning for Computer Vision' will be renamed 'Deep Learning for Computer Vision - Basics' and offered in the summer semester.

The lecture 'Computer Vision for Human-Computer Interaction' will no longer be offered.

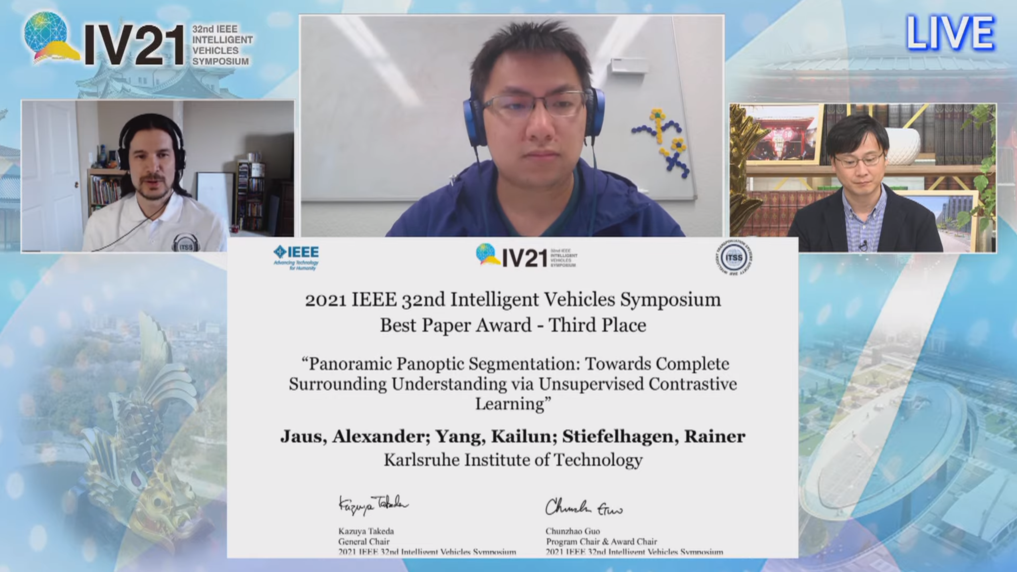

We received the Best Paper Award (Third Place) at IV 2021 for our paper 'Panoramic Panoptic Segmentation: Towards Complete Surrounding Understanding via Unsupervised Contrastive Learning' by Alexander Jaus, Kailun Yang and Rainer Stiefelhagen.

Routago (formerly iXpoint) was one of our partners in the BMBF-funded project TERRAIN. Together, we developed a navigation app for visually impaired persons, and Routago is now looking for new investors on the German TV show 'Die Höhle der Löwen'.

'Every Annotation Counts: Multi-label Deep Supervision for Medical Image Segmentation'

by S. Reiß, C. Seibold, A. Freytag, E. Rodner, R. Stiefelhagen

'Capturing Omni-Range Context for Omnidirectional Segmentation'

by K. Yang, J. Zhang, S. Reiß, X. Hu, R. Stiefelhagen

'Temporally-Weighted Hierarchical Clustering for Unsupervised Action Segmentation'

by M. S. Sarfraz, N. Murray, V. Sharma, A. Diba, L. Van Gool, R. Stiefelhagen

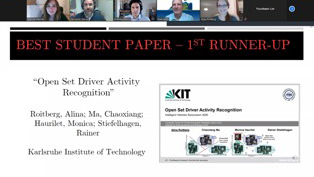

Our paper 'Open Set Driver Activity Recognition' received the Best Student Paper Award (First Runner-Up) at the Intelligent Vehicles Symposium 2020. Congratulations to Alina Roitberg, Chaoxiang Ma and Monica Haurilet!

We were invited to present our paper by A. Roitberg, written in collaboration with M. Martin from Fraunhofer IOSB. The paper features Drive&Act, the first large-scale dataset for fine-grained driver activity recognition.

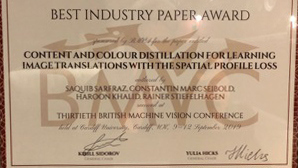

At the 30th British Machine Vision Conference (BMVC) 2019 in Cardiff, our team once again won the Best Industry Paper Award, this time for its work on image translation with the Spatial Profile Loss.

Our work on 'Self-Supervised Learning of Face Representations' received the Best Paper Award at the IEEE International Conference on Automatic Face and Gesture Recognition (FG) 2019.

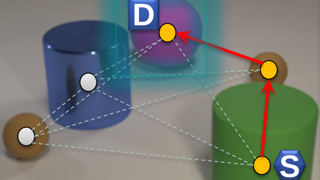

The paper by M. Haurilet et al. presents a novel model based on a graph-traversal scheme for visual reasoning.

The paper by M. S. Sarfraz et al. introduces a highly efficient clustering approach based on first-neighbour relations.

The paper by M. S. Sarfraz et al. presents a novel approach for person re-identification and an unsupervised re-ranking method for retrieval applications.

The paper by V. Sharma et al. presents a novel CNN architecture that enhances image-specific details via dynamic enhancement filters, with the overall goal of improving classification.

Monica Haurilet and Ziad Al-Halah participated in the Textbook Question Answering challenge, winning first place in the text-based track and second place in the diagram-based track.

Our new test lab for barrier-free access to information for visually impaired persons was inaugurated on 3 June 2016.

At the 26th British Machine Vision Conference (BMVC) 2015, our team received the Best Industry Paper Award for its work on thermal-visible face recognition.

At the 9th International Computer Vision Summer School (ICVSS) 2015, our team member Ziad Al-Halah received the best presentation prize for his work on 'Hierarchical Transfer of Semantic Attributes'.

MIT Technology Review featured an article on our thermal-visible face matching work in July 2015.

At the 22nd International Conference on Pattern Recognition (ICPR), Prof. Stiefelhagen's team received the IBM Best Student Paper Award in the track 'Pattern Recognition and Machine Learning' for the work "High-Level Semantics in Transfer Metric Learning".

Our research group has received a Google Faculty Research Award for its work on "A Mobility and Navigational Aid for Visually Impaired Persons". The Google Faculty Research Award is endowed with USD 83,000 to support research in computer science, engineering and related disciplines.

The video production team from the Department of Informatics visited our booth at CeBIT 2013 and recorded a presentation of our demos there.

We received some nice press coverage after CeBIT. Check it out:

- on heute.de

- on itworld.com

Check out our project pages to learn more about our research on face analysis and person identification in multimedia.