Final Reports of the practical course for Computer Vision for Human-Computer-Interaction SS2021

Walkable Path Discovery Utilizing Drones

Haobin Tan, Chang Chen and Xinyu Luo

Lacking the ability to sense ambient environments effectively, blind and visually impaired people face difficulty in walking outdoors, especially in urban areas. Therefore, tools for assisting the visually impaired are of great importance. In this paper, we propose a novel “flying guide dog” prototype for visually impaired assistance using a drone and street view semantic segmentation. Based on the walkable areas extracted from the segmentation prediction, the drone can adjust its movement automatically and thus lead the user along the walkable path. By recognizing the color of pedestrian traffic lights, our prototype can help the user cross the street safely. Furthermore, we introduce a new dataset named Pedestrian and Vehicle Traffic Lights (PVTL), which is dedicated to traffic light recognition. The results of a user study in real-world scenarios show that our prototype is effective and easy to use, providing new insight into visually impaired assistance.
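As a rough illustration of how a walkable-area mask could be turned into a movement adjustment, the sketch below computes the horizontal centroid of the walkable pixels and compares it with the image centre. This is not the authors' control logic: the function names, the binary mask layout, the `dead_zone` parameter and the command strings are all assumptions made for this example.

```python
def steering_offset(mask):
    """Offset of the walkable-area centroid from the image centre, in [-1, 1].

    `mask` is a hypothetical binary segmentation result: a list of rows,
    where 1 marks a walkable pixel. Assumes the mask is at least 2 pixels wide.
    """
    width = len(mask[0])
    # Collect the column index of every walkable pixel.
    cols = [x for row in mask for x, value in enumerate(row) if value]
    if not cols:
        return None  # no walkable area visible
    centroid = sum(cols) / len(cols)
    centre = (width - 1) / 2
    return (centroid - centre) / centre


def steering_command(mask, dead_zone=0.2):
    """Map the centroid offset to a coarse command (names are illustrative)."""
    offset = steering_offset(mask)
    if offset is None:
        return "stop"
    if offset < -dead_zone:
        return "left"
    if offset > dead_zone:
        return "right"
    return "forward"


# Toy mask: the walkable area lies on the right side of the image.
example_mask = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 1, 1, 1],
]
command = steering_command(example_mask)
```

The dead zone keeps the command stable when the path is roughly centred, so small segmentation noise does not cause constant left and right corrections.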

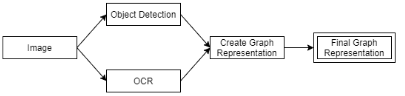

Image Analysis of Structured Visual Content

Maximilian Beichter, Lukas Schölch and Jonas Steinhäuser

Structured visual content such as graphs and flow charts cannot be perceived by visually impaired people or interpreted by machines. To make this possible, we propose an approach that converts such structured visual content into a machine-understandable representation. Previous work exists only for very restricted subfields of this task, which make strong assumptions about the images. To circumvent these restrictions, we propose a machine learning approach that is trained on a synthetic data set and aims to solve the problem for real data. We show that this is successful to some degree, discuss the limitations of the approach, and propose improvements for future applications. [pdf]

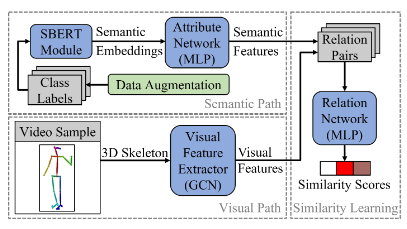

Zero-Shot Gesture Recognition

David Heiming, Hannes Uhl and Jonas Linkerhägner

Interaction with computer systems is one of the most important topics of the digital age. Interfacing with a system through body movements rather than tactile controls can provide significant advantages. To make that possible, the system needs to reliably detect the performed gestures. Systems using conventional deep learning methods are therefore trained on all possible gestures beforehand. Zero-Shot learning models, on the other hand, aim to also recognize gestures not seen during training when given their labels. The model thus needs to extract information about an unseen gesture’s visual appearance from its label. Using typical text embedding modules like BERT, that information will be focused on the semantics of the label rather than its visual characteristics. In this work, we present several forms of data augmentation that can be applied to the semantic embeddings of the class labels in order to increase their visual information content. This approach achieves a significant performance increase for a Zero-Shot gesture recognition model. [pdf]
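The general zero-shot idea described above, matching a gesture's visual features against embeddings of class labels, can be sketched as a nearest-neighbour lookup by cosine similarity. The example assumes that a visual encoder already projects a gesture into the same space as the label embeddings; the three-dimensional vectors and gesture names are toy values, and the sketch shows neither the report's model nor its augmentation methods.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(visual_embedding, label_embeddings):
    """Return the label whose embedding is most similar to the visual one."""
    return max(
        label_embeddings,
        key=lambda label: cosine_similarity(visual_embedding, label_embeddings[label]),
    )


# Hypothetical label embeddings; in practice they would come from a text
# encoder such as BERT, possibly after augmentation.
label_embeddings = {
    "swipe left": [1.0, 0.0, 0.2],
    "swipe right": [-1.0, 0.0, 0.2],
    "thumbs up": [0.0, 1.0, 0.1],
}

# Hypothetical output of a visual encoder for a gesture unseen in training.
visual_embedding = [0.1, 0.9, 0.0]
predicted = zero_shot_classify(visual_embedding, label_embeddings)
```

Because classification depends only on the label embeddings, a new gesture can be added by inserting one more entry into `label_embeddings`, which is why the information content of those embeddings matters so much.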

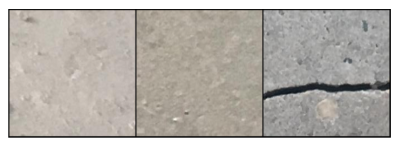

Anomaly Detection in Construction Sites

Martin Tsekov, Patrick Deubel and Lei Wan

Anomaly detection has applications in a variety of domains, such as network intrusion detection, video surveillance, and medical diagnosis. The main idea of anomaly detection is to model normal behavior and detect deviations from it, e.g. monitoring rarely occurring accidents on a video camera. This paper evaluates reconstruction-based methods [16] and an embedding similarity-based method for anomaly detection on images from construction sites. We show that both types of methods can achieve good classification performance on a clean dataset, but applying them to a noisy dataset results in poor performance. To alleviate this issue, we apply two cleaning methods to the noisy dataset. [pdf]
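The core principle stated above, modelling normal behavior and flagging deviations from it, can be illustrated with a deliberately simple stand-in. The sketch fits a per-feature mean and standard deviation on normal samples and scores new samples by their largest z-score. It is not one of the reconstruction-based or embedding similarity-based methods evaluated in the report; the feature vectors, the function names and the threshold of 3.0 are assumptions chosen for this example.

```python
import statistics


def fit_normal_model(normal_samples):
    """Estimate per-feature mean and standard deviation from normal data only."""
    columns = list(zip(*normal_samples))
    means = [statistics.mean(column) for column in columns]
    stds = [statistics.stdev(column) for column in columns]
    return means, stds


def anomaly_score(sample, model):
    """Largest absolute z-score over all features (higher = more unusual)."""
    means, stds = model
    return max(abs(x - m) / s for x, m, s in zip(sample, means, stds))


def is_anomaly(sample, model, threshold=3.0):
    """Flag a sample whose score exceeds the (assumed) threshold."""
    return anomaly_score(sample, model) > threshold


# Toy "normal" feature vectors, e.g. image descriptors of ordinary scenes.
normal_samples = [
    [0.90, 1.10],
    [1.00, 1.00],
    [1.10, 0.90],
    [1.00, 1.20],
    [0.95, 0.95],
]
model = fit_normal_model(normal_samples)
```

Since the model is fitted on data assumed to be normal, mislabeled or corrupted training samples widen the estimated spread and hide real anomalies, which is one way to see why a noisy dataset hurts performance.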
